1. A quick reminder on processes, threads, and CPUs

Before jumping straight into coroutines, I’d like to take a detour and go back to the basics of how programs are executed. Hopefully this is something you already know all about, but recalling the general principles will prove useful later.

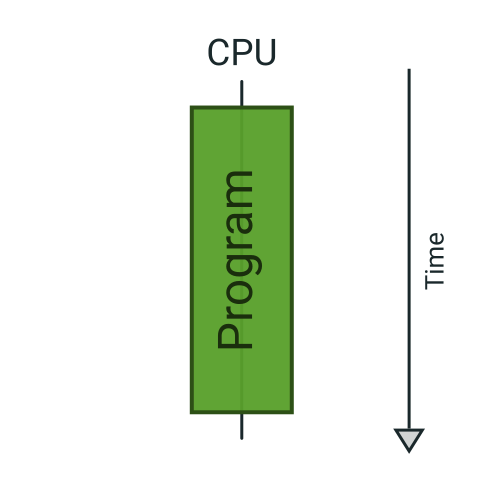

Sequential execution

A CPU executes instructions sequentially. The simplest representation of this is a (small) microcontroller: only one processing core, no OS, no “processes”. The CPU simply eats up instructions one by one, tirelessly, like Pacman eats those small yellow dots. It can go to sleep and resume later if some special instruction asks it to, but it will never execute two instructions at the same time. (To keep things simple, I’m leaving aside the mechanism provided by interrupts.)

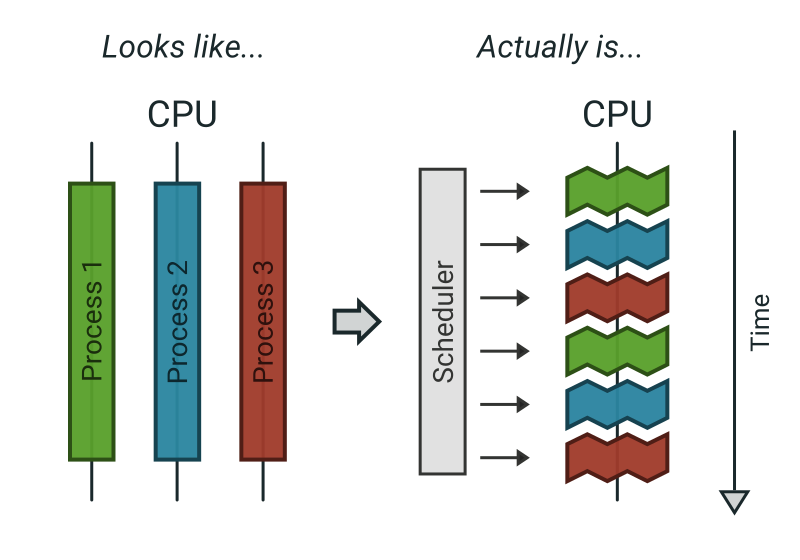

Multi-process and scheduler

On a more complicated system, such as a computer, you want to be able to procrastinate on YouTube while you pretend to work with your IDE open on the side; so, you want to have multiple processes running at the same time. Nowadays CPUs have a bunch of cores working effectively in parallel, but you practically never have as many cores as running processes, so your OS implements a few tricks to let you run as many programs as you’d like. Mainly, it implements time-sharing with a preemptive scheduler: in other words, it creates the illusion of multiple processes running at the same time by sharing the CPU time among the running processes, switching quickly from one to the next, a mechanism known as scheduling. The key word here is “preemptive”: it means that the scheduler has complete control over which process is running and can forcefully interrupt and resume processes as it likes (in fact, processes don’t even realize when they are interrupted; the mechanism is completely hidden at their level). Keep this detail in mind as it will come back later.
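To make the time-sharing idea a bit more concrete, here is a toy round-robin simulation. It is only a sketch: the `Proc` class, the `roundRobin` function and the `quantum` parameter are names made up for this illustration, and a real OS scheduler is far more sophisticated (priorities, blocking I/O, actual preemption through timer interrupts). It simply shows how handing out short time slices in turn lets several processes make progress on a single core.

```kotlin
// Toy model: a "process" is just a name and an amount of remaining work units.
// (Hypothetical type, for illustration only.)
data class Proc(val name: String, var remaining: Int)

// Round-robin time-sharing: each process gets at most `quantum` units of CPU
// time, then goes back to the end of the queue if it still has work to do.
// Returns the timeline of which process occupied the CPU at each time unit.
fun roundRobin(procs: List<Proc>, quantum: Int): List<String> {
    require(quantum > 0) { "quantum must be positive" }
    val timeline = mutableListOf<String>()
    val queue = ArrayDeque(procs)
    while (queue.isNotEmpty()) {
        val p = queue.removeFirst()
        val slice = minOf(quantum, p.remaining)
        repeat(slice) { timeline += p.name }
        p.remaining -= slice
        // The process never "decides" to give up the CPU: the scheduler does.
        if (p.remaining > 0) queue.addLast(p)
    }
    return timeline
}

fun main() {
    val timeline = roundRobin(listOf(Proc("IDE", 3), Proc("YouTube", 5)), quantum = 2)
    println(timeline.joinToString(" "))
    // prints: IDE IDE YouTube YouTube IDE YouTube YouTube YouTube
}
```

With a small enough quantum, the switches happen so often that both programs appear to run at the same time.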

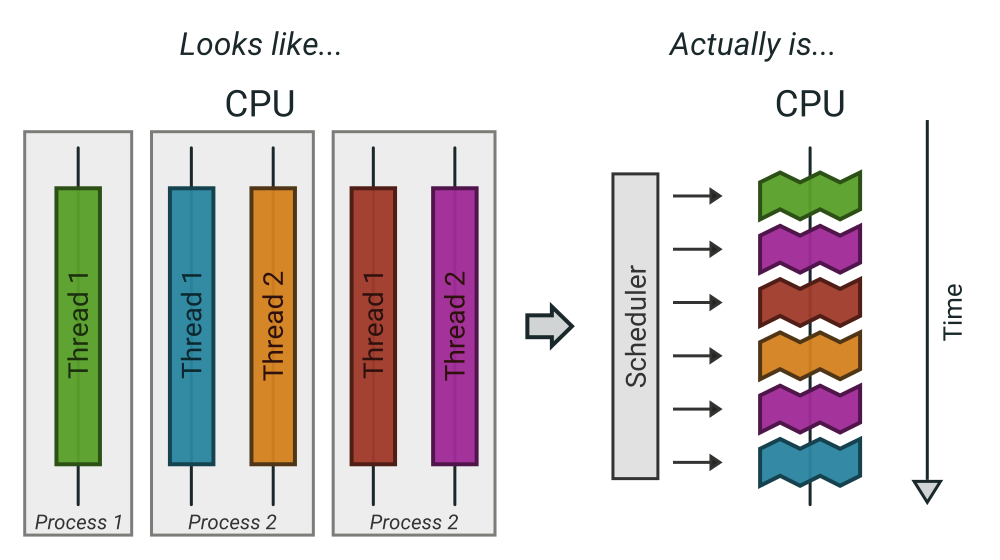

Threads

Threads are sort of “sub-processes”. They provide a way for a single process to have multiple concurrent threads of execution, which can come in handy when your application logic requires you to do separate things at the same time (for example, you could have one thread that handles the UI of your application with a main event loop, while a background thread handles the application’s network connection to an API). So actually, the scheduler doesn’t schedule processes but threads, and an implicit “main” thread is automatically provided for processes that do not explicitly use multi-threading.
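As a small illustration, the sketch below starts one extra thread next to the implicit main thread, using the `thread` helper from the Kotlin standard library. The function name `runOnTwoThreads` and the thread name `background-worker` are invented for this example; the "work" done in the background is just recording which thread it runs on.

```kotlin
import kotlin.concurrent.thread

// Starts a background thread and returns the name of the calling thread
// together with the name of the thread that ran the background block.
fun runOnTwoThreads(): Pair<String, String> {
    val callerName = Thread.currentThread().name
    var workerName = ""
    val worker = thread(name = "background-worker") {
        // This block runs on the new thread, not on the caller's thread.
        workerName = Thread.currentThread().name
    }
    // Wait for the background thread to finish before reading its result.
    worker.join()
    return callerName to workerName
}

fun main() {
    val (caller, worker) = runOnTwoThreads()
    println("caller thread: $caller")
    println("worker thread: $worker")
}
```

Both threads belong to the same process, but each one is scheduled independently by the OS.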

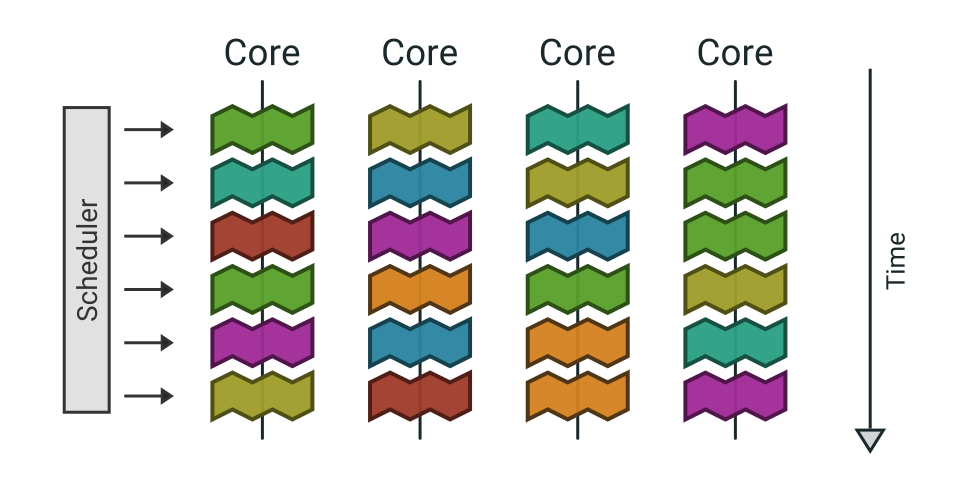

Cores

Finally, when you have a multi-core CPU, things are actually not much more complicated: it just means that the scheduler can run more than one thread at the same time (as many as there are cores), handling time-sharing slots independently for each core. A single thread may be moved from one core to another many times during its life, at the will of the omniscient algorithm of the scheduler; and, as already stated, without even knowing it.
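A quick way to see this from a JVM program is to start more threads than there are cores and check that they all still complete. This is only a sketch: `runMoreThreadsThanCores` and its `factor` parameter are hypothetical names for this example, and `availableProcessors()` reports the number of processors available to the JVM, which may differ from the number of physical cores.

```kotlin
import java.util.concurrent.atomic.AtomicInteger
import kotlin.concurrent.thread

// Starts `factor` times more threads than there are available processors and
// returns (number of processors, number of threads that ran to completion).
fun runMoreThreadsThanCores(factor: Int = 4): Pair<Int, Int> {
    val cores = Runtime.getRuntime().availableProcessors()
    val finished = AtomicInteger(0)
    val threads = List(cores * factor) {
        thread { finished.incrementAndGet() }
    }
    // The scheduler decides when and on which core each thread runs;
    // all we do here is wait for every one of them to finish.
    threads.forEach { it.join() }
    return cores to finished.get()
}

fun main() {
    val (cores, finished) = runMoreThreadsThanCores()
    println("$finished threads completed on $cores available processors")
}
```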

This explanation is a bit simplistic, but it is more than enough for our purpose here. Let’s talk about multitasking from a program’s (and programmer’s) point of view.

Very, very nice article! Many thanks!

It would be very nice to learn more about exception handling in coroutines!

Great article. I love that your examples also cover blocking calls like Thread.sleep and how specifying the dispatcher helps in this case. This would have helped me a lot when I first started working with coroutines!

This is by far one of the BEST introductions to coroutines I’ve read. I’ve shared this with my team at work.

Thank you for putting this together. The diagrams are amazing! I love how you build intuition. I’ve been wanting to put something like this together for some time, but I don’t think I could have done such a good job.

Small nitpick:

In this sentence:

>> “it would only require to change launch() for another coroutine builder : withContext()”

Calling withContext() a coroutine *builder* can be misleading, as it doesn’t actually create a new coroutine, it just changes the scheduler (or context should I say). https://pl.kotl.in/T-XZv31xL

>> “This function suspends the current coroutine while the code inside its block is executed.”

Again, there’s only one coroutine 🙂 just in a different context. And the coroutine doesn’t get suspended when calling `withContext` (it might suspend later, though).

Hi Fernando, thanks for the comment and the suggestions!

I checked the doc of withContext again and indeed, I had misunderstood its behavior. I think I was misled by the part that says “suspends until it completes”, which at first I thought meant that the current coroutine was suspended and, implicitly, that a new one was created. I fixed that paragraph and I hope it is now correct, but please let me know if you see any mistake.

Dude you’re amazing, your prose is completely intuitive and easy to follow. NOW I can read that damned documentation and make sense of it.

Thank you slicia.io for the explanation